Mark Zuckerberg’s Meta said on Friday that it was releasing a series of new AI models from its research division, Fundamental AI Research (FAIR). These include a ‘Self-Taught Evaluator’ that could reduce the need for human involvement in the AI development process, and another model that freely mixes text and speech.

The announcements follow a paper Meta published in August, which detailed how the Self-Taught Evaluator relies on the ‘chain of thought’ technique. OpenAI also uses this technique in its recent o1 models, which think through a problem before they respond. Google and Anthropic have also published research on Reinforcement Learning from AI Feedback (RLAIF), but their models are not yet available for public use.

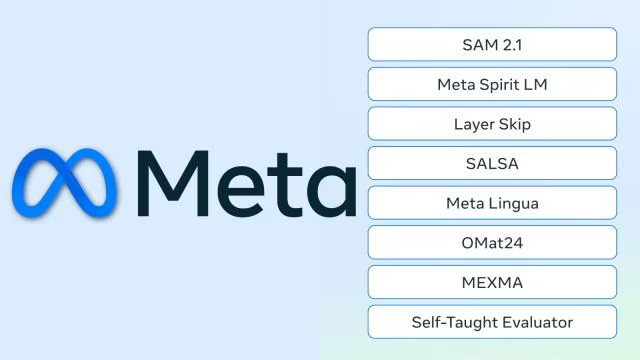

Meta’s FAIR researchers said the new releases support the company’s goal of achieving advanced machine intelligence while also promoting open science and reproducibility. The released models include an updated Segment Anything Model 2 for images and videos, Meta Spirit LM, Layer Skip, SALSA, Meta Lingua, OMat24, MEXMA, and the Self-Taught Evaluator.
